KeLP Sequence Learning

In this page we will learn how to use KeLP to build a sequential classifier. In fact, problems, where the involved input and outputs are sequences, are frequent in many fields. For example, problems like speech and hand-written recognition, protein secondary structure prediction or part-of-speech tagging, can be all treated with a sequence learning framework. In machine learning, such problems led to the definition of specific learning frameworks, where sequences are the main input/output of the algorithms. It makes the sequence learning different from classical pattern classification approaches. In the case of sequence problems, input/output sequences are strongly related each other.

Here we are going to provide a guide to adopt the sequence learning capabilities offered by the KeLP framework. In the next section, we are going to provide a brief introduction to the math behind the KeLP implementation, also described in (Castellucci et al., 2015).

If you are not interested in the math stuff, you can safely skip to the third section.

A hybrid discriminative-generative framework

The sequence labeling approach adopted in KeLP is based on an hybrid generative-discriminative framework. In particular, the model is made of a discriminative multi-classifier based on SVM, which is used to estimate the parameters of a Hidden Markov Model. The sequence classifier is obtained by applying the Viterbi algorithm on the trellis built starting from the SVM estimates. This formulation is inspired by the SVM-HMM classifier (Altun et al, 2003). The main difference is in the underlying optimization formulation, that in the case of SVM-HMM follows the Structured Learning paradigm proposed in (Joachims et al, 2009).

Let us consider an input sequence ![]() of observations, where

of observations, where ![]() is a feature vector of some properties characterizing the instance

is a feature vector of some properties characterizing the instance ![]() . Then, each sequence is associated to a label or tag sequence

. Then, each sequence is associated to a label or tag sequence ![]() (with

(with ![]() and

and ![]() ). The aim of this model is to provide a learning schema, that is able to induce a classification function

). The aim of this model is to provide a learning schema, that is able to induce a classification function ![]() that given

that given ![]() is able to find the correct

is able to find the correct ![]() , i.e.

, i.e. ![]() .

.

The final aim is to make the classification of an instance ![]() dependent from the label assigned to the previous elements in a history of length

dependent from the label assigned to the previous elements in a history of length ![]() , i.e

, i.e ![]() . Given this history, a sequence of

. Given this history, a sequence of ![]() labels can be retrieved, in the form

labels can be retrieved, in the form ![]() . In order to make the classification of

. In order to make the classification of ![]() dependent also from the history, we can augment the feature vector of

dependent also from the history, we can augment the feature vector of ![]() introducing a vector of transitions

introducing a vector of transitions ![]() : it is a boolean vector where the dimensions corresponding to the

: it is a boolean vector where the dimensions corresponding to the ![]() labels preceding the target element

labels preceding the target element ![]() are set to

are set to ![]() . It means that in the vector representations of an instance

. It means that in the vector representations of an instance ![]() , we add specific features representing the transitions corresponding to the current label sequence.

, we add specific features representing the transitions corresponding to the current label sequence.

A projection function ![]() is thus defined to consider both the observations, i.e.

is thus defined to consider both the observations, i.e. ![]() and the transitions

and the transitions ![]() in a history of size

in a history of size ![]() by concatenating the two representation, i.e.:

by concatenating the two representation, i.e.:

![]()

with ![]() and

and ![]() leaves intact the original feature space.

leaves intact the original feature space.

At training time, a One-Vs-All schema over the feature space derived by ![]() can be adopted. In this way, for each

can be adopted. In this way, for each ![]() a linear classifier

a linear classifier ![]() is learned. The

is learned. The ![]() function is computed for each element

function is computed for each element ![]() by exploiting the gold label sequences.

by exploiting the gold label sequences.

At classification time, all possible sequences ![]() should be considered in order to determine the best labeling

should be considered in order to determine the best labeling ![]() , where

, where ![]() is the size of the history used to enrich

is the size of the history used to enrich ![]() , that is:

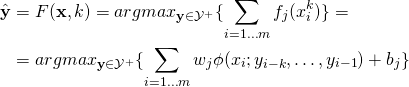

, that is:

(1)

In order to reduce the computational cost, a \textit{Viterbi-like decoding algorithm} is adopted to derive the sequence, and thus build the augmented feature vectors through the ![]() function.

function.

An example of sequence learning in KeLP

The following example is based on the class available in the ![]() project

project

|

1 |

it.uniroma2.sag.kelp.examples.demo.seqlearn.SequenceLearningLinearMain |

and

|

1 |

it.uniroma2.sag.kelp.examples.demo.seqlearn.SequenceLearningKernelMain |

In practice, given a dataset (a SequenceDataset) of SequenceExamples where each item in the sequence is represented as a set of Representations, the Sequence Learning framework in KeLP implements a learning process operating in the feature space. During the training step, each example is enriched to consider its history, in terms of classes assigned to the previous elements of the sequence. During the tagging process, the history of an example is dynamically estimated by a classifier and the entire sequence of labels is derived through a Viterbi decoding step combined with a beam search strategy.

The main data structure used in a Sequence Learning task is the SequenceDataset. It is made by a list of SequenceExample objects, each made by a list of Examples.

An Example models a single element of a sequence, for example a token in a part-of-speech tagging task. A SequenceExample models an entire sequence, e.g., the sentence in the part-of-speech tagging context. Finally, the SequenceDataset is used to model a dataset of sequences.

KeLP offers utility methods to load such object structure with a simple and intuitive interface, which is show in the following snippet:

|

1 2 3 |

String inputTrainFilePath = "THE PATH OF YOUR DATASET"; SequenceDataset sequenceTrainDataset = new SequenceDataset(); sequenceTrainDataset.populate(inputTrainFilePath); |

It requires that the dataset is provided in a specific format, where each line is used to represent an element of a sequence along with its label, and each sequence is separated by a blank line. For example, the following snippet represents two sequences, the first composed by 5 elements and the second made by 4 elements. The first token of each SequenceExample is the label associated to that element of the sequence. In the following snippet the label of the first Example of the first sequence is 19, the label of the second Example of the sequence is 31, and so on…

Then, each Example is represented through a list of vectors, as the ones that has already been described at this page.

|

1 2 3 4 5 6 7 8 9 10 |

19 |BV:rep| 5:1 23:1 84:1 576:1 1657:1 |EV| |BS:word| he |ES| 31 |BV:rep| 26:1 30:1 83:1 88:1 1431:1 1432:1 1658:1 |EV| |BS:word| has |ES| 29 |BV:rep| 7:1 8:1 9:1 10:1 39:1 87:1 150:1 1433:1 1434:1 3711:1 3712:1 3713:1 |EV| |BS:word| excited |ES| 9 |BV:rep| 15:1 16:1 17:1 18:1 29:1 154:1 1687:1 1688:1 3714:1 3715:1 3716:1 3717:1 3718:1 3719:1 3720:1 3721:1 3722:1 |EV| |BS:word| domestic |ES| 15 |BV:rep| 30:1 38:1 483:1 484:1 485:1 1265:1 1573:1 1693:1 1694:1 3723:1 3724:1 3725:1 3726:1 3727:1 3728:1 3729:1 3730:1 3731:1 3732:1 3733:1 3734:1 3735:1 3736:1 |EV| |BS:word| insurrections |ES| 3 |BV:rep| 5:1 83:1 247:1 248:1 576:1 3977:1 |EV| |BS:word| nor |ES| 30 |BV:rep| 51:1 84:1 87:1 250:1 251:1 271:1 321:1 322:1 3978:1 |EV| |BS:word| have |ES| 19 |BV:rep| 23:1 58:1 84:1 88:1 278:1 323:1 324:1 577:1 |EV| |BS:word| we |ES| 29 |BV:rep| 26:1 47:1 51:1 88:1 135:1 136:1 578:1 1435:1 |EV| |BS:word| been |ES| |

The KeLP sequential learning process requires the specification of a number of parameters.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 |

/* * This variable determines the number of previous example considered * in the learning/labeling process. If this variable is set to 0, the * learning process corresponds to a traditional multi-class classification * schema. */ int transitionsOrder = 1; /* * This variable determines the importance of the transition-based * features during the learning process. Higher values will assign * more importance to the transitions. */ float weight = 1f; /* * The size of the beam to be used in the decoding process. This number * determines the number of possible sequences produced in the labeling * process. Notice that higher beam values will result in slower * computations. */ int beamSize = 5; /* * During the labeling process, each item is classified with respect to * the target classes. To reduce the complexity of the labeling process, * this variable determines the number of classes to maintain at each step, * i.e., the ones that receive the highest classification scores * are considered after the classification step in the Viterbi Decoding. */ int maxEmissionCandidates = 3; |

Before the Viterbi decoding, a base learning algorithm is needed in order to classify each examples to one of the target classes. Each of the learning algorithms implementing the ClassificationLearningAlgorithm interface (described at this page) can be adopted.

In the case that a learning algorithm operating in the primal space is adopted (such as the DCDLearningAlgorithm) the SequenceClassificationLinearLearningAlgorithm needs to be instantiated, in combination with methods implementing a LinarMethod. This option is preferred when no complex kernel is required. Moreover, it is useful for efficiency reasons: the time/space required by the base learning algorithm in the training process depends on the number of Examples stored in the SequenceExamples; if this number grows, it is worth to adopt efficient method, such as DCDLearningAlgorithm or LiblinearLearningAlgorithm. In this case, the learning method can operate only over a geometrical representation (i.e, a Vector).

|

1 2 3 4 5 |

float cSVM = 1f; DCDLearningAlgorithm instanceClassifierLearningAlgorithm = new DCDLearningAlgorithm(cSVM, cSVM, DCDLoss.L1, false, 50, originalRepresentationName); SequenceClassificationLearningAlgorithm sequenceClassificationLearningAlgorithm = new SequenceClassificationLinearLearningAlgorithm(instanceClassifierLearningAlgorithm, transitionsOrder, weight); sequenceClassificationLearningAlgorithm.setBeamSize(beamSize); sequenceClassificationLearningAlgorithm.setMaxEmissionCandidates(maxEmissionCandidates); |

In order to use a kernel based method, the following code is required. Obviously, a kernel operating over the (possibile multiple) representations from the Examples in the SequenceExamples need to be implemented. It is suggested to adopt a KernelCache, that needs to be passed directly to the learning algorithm.

|

1 2 3 4 5 6 7 8 9 10 |

//Instance classifier: Kernel based version float cSVM = 1f; Kernel representationKernel = new LinearKernel(originalRepresentationName); BinaryCSvmClassification binaryCSvmClassification = new BinaryCSvmClassification(representationKernel, null, cSVM, cSVM); // Sequence classifier SequenceClassificationKernelBasedLearningAlgorithm sequenceClassificationLearningAlgorithm = new SequenceClassificationKernelBasedLearningAlgorithm(binaryCSvmClassification, transitionsOrder, weight); sequenceClassificationLearningAlgorithm.setBeamSize(beamSize); sequenceClassificationLearningAlgorithm.setMaxEmissionCandidates(maxEmissionCandidates); sequenceClassificationLearningAlgorithm.setKernelCache(new SimpleDynamicKernelCache()); |

In both of the above cases, the ![]() method initiates the learning process, that will produce a dedicated prediction function, called SequencePredictionFunction.

method initiates the learning process, that will produce a dedicated prediction function, called SequencePredictionFunction.

|

1 2 |

sequenceClassificationLearningAlgorithm.learn(sequenceTrainDataset); SequencePredictionFunction predictionFunction = (SequencePredictionFunction) sequenceClassificationLearningAlgorithm.getPredictionFunction(); |

The above prediction function can be easily used to tag a new sequence, obtaining a dedicated object, i.e., a SequencePrediction. The most sequence obtained by the Viterbi Decoding can be accessed via the ![]() method.

method.

|

1 2 3 |

SequenceExample sequenceExample = (SequenceExample) example; SequencePrediction sequencePrediction = (SequencePrediction) predictionFunction.predict(sequenceExample); System.out.println(sequencePrediction.bestPath()); |

References

Castellucci Giuseppe, Andrea Vanzo, Danilo Croce, Roberto Basili. (2015) Context-aware Models for Twitter Sentiment Analysis. IJCoL vol. 1, n. 1 december 2015: Emerging Topics at the First Italian Conference on Computational Linguistics. 2015

Joachims, T., Finley, T., and Yu, C.-N. (2009). Cutting-plane training of structural svms. Machine Learning. 77(1), 27–59.

Altun, Y., Tsochantaridis, I., and Hofmann, T. (2003). Hidden Markov support vector machines. In Proceedings of the International Conference on Machine Learning.